MCP: The USB-C Moment for APIs [And Why You Should Care]

![MCP: The USB-C Moment for APIs [And Why You Should Care]](https://www.dariocuevas.com/images/APIvsMCP.png)

🔌 What is MCP? The Universal Connector for AI

Remember the chaos of the early 2010s? Every device had its own charger. iPhones had their 30-pin connector, Android phones used micro-USB, laptops needed proprietary adapters, and your desk looked like a cable graveyard. Then USB-C arrived, and suddenly, one cable could charge your phone, laptop, tablet, and headphones. It was a game-changer—not because it was more powerful, but because it was universal.

That's exactly what's happening in software right now with the Model Context Protocol (MCP).

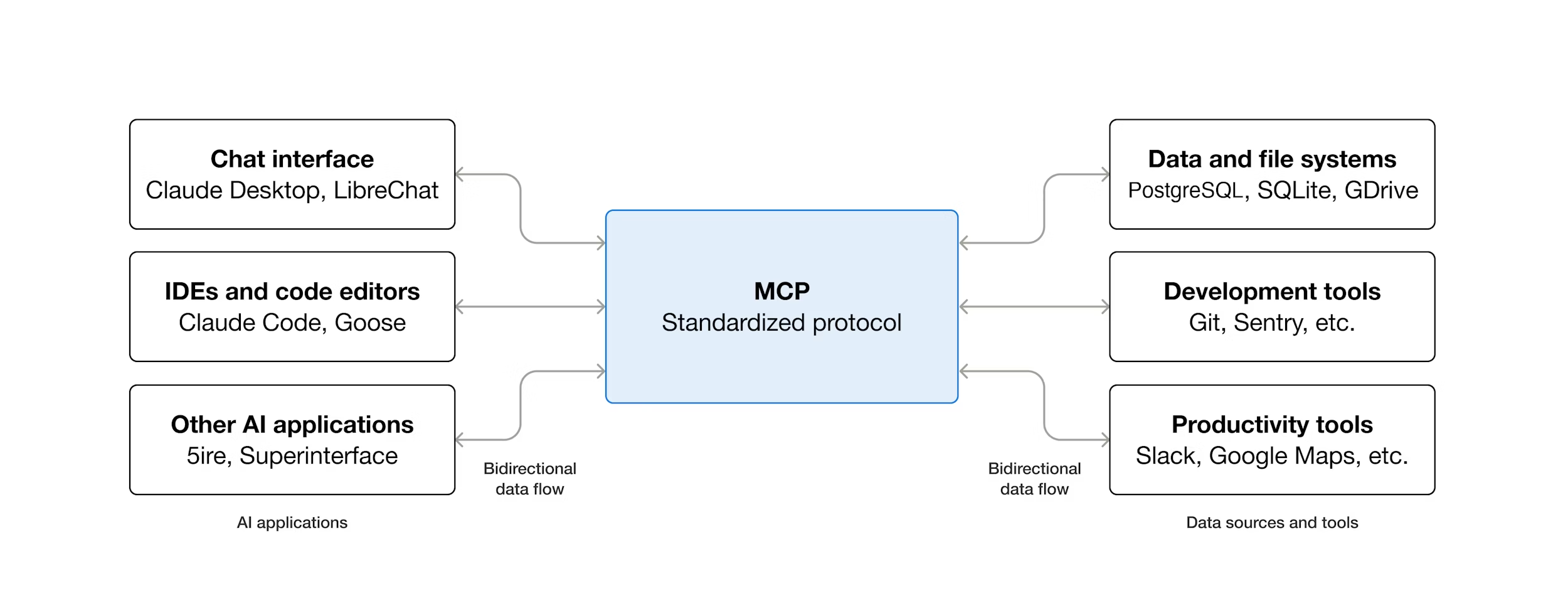

Announced by Anthropic in November 2024 and since adopted by OpenAI, Google DeepMind, and development platforms such as Zed, Replit, and Sourcegraph, MCP is an open standard that defines how AI systems connect to data sources and tools. Think of it as the USB-C port for AI applications: a standardized way to plug AI models into databases, business tools, content repositories, and development environments.

Before MCP, every new data source required its own custom connector. Want to connect your AI assistant to Google Drive? Build a connector. Need Slack integration? Build another one. GitHub? Another custom implementation. This created what Anthropic calls the "N×M problem": N data sources times M AI applications equals a maintenance nightmare.

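MCP changes this equation. Instead of building separate connectors for each combination, developers now build against a single, universal protocol: each data source is wrapped once as an MCP server, each AI application implements an MCP client once, and the work grows as N+M rather than N×M. The protocol takes care of how to connect; you just define what to connect to.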
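To make the arithmetic concrete, here is a minimal sketch. The function names and the sample counts are illustrative assumptions, not figures from Anthropic.

```python
def point_to_point_connectors(n_sources: int, m_apps: int) -> int:
    """Without a shared protocol, every app needs a connector per source."""
    return n_sources * m_apps


def shared_protocol_adapters(n_sources: int, m_apps: int) -> int:
    """With a shared protocol, each source ships one server and each app one client."""
    return n_sources + m_apps


if __name__ == "__main__":
    # Hypothetical platform sizes, chosen only to show how the gap widens.
    for n, m in [(3, 2), (10, 5), (50, 20)]:
        print(
            f"{n} sources x {m} apps: "
            f"{point_to_point_connectors(n, m)} custom connectors vs "
            f"{shared_protocol_adapters(n, m)} protocol adapters"
        )
```

The gap is small for a handful of integrations and grows quickly as either side of the equation grows.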

Source: Anthropic

🔧 The API Problem Nobody Talks About

If you've worked with APIs long enough, you know the pain: every change is a potential disaster.

Here's the reality of traditional API development:

Breaking Changes Are Inevitable—and Expensive

When you modify an API endpoint—changing a parameter from optional to required, updating a response format, or removing a field—you risk breaking every client application that depends on it. One small change can cascade into customer complaints, emergency patches, and late-night war rooms. Companies like Salesforce support up to 21 different API versions simultaneously (yes, versions going back to 2014) just to avoid breaking their customers' integrations.

Backward Compatibility Is a Full-Time Job

Maintaining backward compatibility means testing every change against every supported version. It means writing extensive migration guides, setting up automated regression tests, and dedicating engineering resources to support legacy versions that should have been deprecated years ago. As one API expert put it: "APIs are forever"—once you release an interface, it becomes a dependency that's nearly impossible to eliminate without disrupting users.

The Version Management Treadmill

To manage changes, teams implement versioning strategies—URI versioning (/v1/users, /v2/users), header versioning, semantic versioning (major.minor.patch). Each approach has trade-offs, but all share one problem: every version multiplies your maintenance burden. More versions mean more testing, more documentation, more infrastructure costs, and more complexity for both API providers and consumers.
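A rough sketch of the first two strategies, with no web framework involved. The handlers, the response shapes, and the `X-API-Version` header name are assumptions made up for illustration. Note how every version that stays alive is another handler to test and document.

```python
from typing import Callable, Dict


def list_users_v1() -> dict:
    # Original response shape (hypothetical).
    return {"users": ["ada", "grace"]}


def list_users_v2() -> dict:
    # Restructured response shape (hypothetical); v1 must stay alive next to it.
    return {"data": [{"name": "ada"}, {"name": "grace"}], "total": 2}


USER_HANDLERS: Dict[str, Callable[[], dict]] = {
    "v1": list_users_v1,
    "v2": list_users_v2,
}


def route_by_uri(path: str) -> dict:
    """URI versioning: the version is a path segment, e.g. /v1/users."""
    parts = path.strip("/").split("/")
    if len(parts) != 2 or parts[1] != "users" or parts[0] not in USER_HANDLERS:
        raise LookupError(f"no handler for {path}")
    return USER_HANDLERS[parts[0]]()


def route_by_header(path: str, headers: Dict[str, str]) -> dict:
    """Header versioning: the path is stable and a header selects the version."""
    version = headers.get("X-API-Version", "v1")  # assumed header name
    if path.strip("/") != "users" or version not in USER_HANDLERS:
        raise LookupError(f"no handler for {path} at version {version}")
    return USER_HANDLERS[version]()


if __name__ == "__main__":
    print(route_by_uri("/v1/users"))
    print(route_by_header("/users", {"X-API-Version": "v2"}))
```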

The Hidden Costs

Consider the tangible costs when APIs break or change:

- Engineering hours diverted from new features to emergency fixes

- Customer support teams overwhelmed with integration-related tickets

- Sales teams dealing with frustrated customers instead of closing deals

- Partner ecosystems damaged when strategic integrations break

- Technical debt accumulating from quick workarounds

The fundamental issue? Tight coupling between services. When Service A calls Service B's API, any change to B's contract potentially requires changes to A's code. This creates fragility and slows down innovation.

💡 How MCP Flips the Script: Abstraction Over Implementation

Here's where MCP fundamentally changes the game—and why it matters for your platform architecture.

The Abstraction Advantage

Traditional APIs are implementation contracts: "Call this URL with these exact parameters in this specific format, and I'll return data in this precise structure." Change any part of that contract, and you break the integration.
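Here is a small illustration of how such a contract breaks. The field names and both response shapes are hypothetical, and the responses are plain dictionaries rather than real HTTP calls.

```python
from typing import Any, Dict


def total_spent(response: Dict[str, Any]) -> float:
    """Client code written against one exact response structure."""
    # Assumes a top-level "purchases" list whose items carry an "amount" field.
    return sum(p["amount"] for p in response["purchases"])


# The shape the client was written for (hypothetical).
old_response = {
    "purchases": [
        {"item": "keyboard", "amount": 79.0},
        {"item": "pro plan", "amount": 12.0},
    ]
}

# The shape after the provider restructures its data (hypothetical).
new_response = {
    "subscriptions": [{"plan": "pro plan", "monthly_price": 12.0}],
    "transactions": [{"sku": "keyboard", "total": 79.0}],
}

if __name__ == "__main__":
    print(total_spent(old_response))
    try:
        total_spent(new_response)
    except KeyError as missing:
        # The same data is still there, but the client no longer finds it.
        print("integration broke, missing field:", missing)
```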

MCP operates at a different level: it's a capability contract. "I can provide user data, execute searches, and perform actions." The MCP server publishes those capabilities with machine-readable descriptions, and the AI client discovers them at runtime and decides how to call them. When you update your service's internals, you update the MCP server's implementation behind the same capability description, and the AI on the other side continues working without code changes.
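As a rough sketch of what a capability contract looks like on the wire, the snippet below imitates the shape of MCP's JSON-RPC tool messages (`tools/list` to discover capabilities, `tools/call` to invoke one). It is a simplified in-process stand-in, not the official SDK. The `get_user_data` tool, its schema, and the in-memory data are assumptions for illustration.

```python
import json
from typing import Any, Dict

# A capability described for the model: a name, a human-readable description,
# and a JSON Schema for the arguments. The tool itself is hypothetical.
TOOLS = [
    {
        "name": "get_user_data",
        "description": "Return the profile for a given user.",
        "inputSchema": {
            "type": "object",
            "properties": {"user_id": {"type": "string"}},
            "required": ["user_id"],
        },
    }
]

_USERS = {"u-1": {"name": "Ada", "plan": "pro"}}  # stand-in for a real backend


def _error(request_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle_request(request: Dict[str, Any]) -> Dict[str, Any]:
    """Answer a JSON-RPC request shaped like MCP's tool messages."""
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        result: Dict[str, Any] = {"tools": TOOLS}
    elif method == "tools/call":
        params = request.get("params", {})
        if params.get("name") != "get_user_data":
            return _error(request_id, -32602, "Unknown tool")
        user = _USERS.get(params.get("arguments", {}).get("user_id"), {})
        result = {"content": [{"type": "text", "text": json.dumps(user)}]}
    else:
        return _error(request_id, -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


if __name__ == "__main__":
    print(handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
    print(handle_request({
        "jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_user_data", "arguments": {"user_id": "u-1"}},
    }))
```

The client never hard-codes a URL or a response structure. It reads the description and schema, then sends a call by name.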

Real-World Example: Two Services, Zero Breaking Changes

Imagine you have two services in your platform:

- Service A: A recommendation engine powered by AI

- Service B: A customer database with purchase history

Traditionally, Service A makes API calls to Service B: GET /api/v1/customers/{id}/purchases. If Service B's team decides to restructure their data model—say, splitting purchases into subscriptions and one-time transactions—they'd need to:

- Create a new API version (/api/v2/)

- Update documentation

- Notify Service A's team

- Wait for Service A to update their code

- Coordinate deployment

- Maintain both versions during migration

With MCP: Service B exposes an MCP server that describes its capabilities: "I can retrieve customer purchase history." The AI in Service A connects via MCP. When Service B restructures its database, its team updates the MCP server implementation, but the interface remains the same. Service A's AI continues requesting "customer purchase history," and Service B's MCP server handles the translation to the new data model. No code changes in Service A. No cross-team coordination. No breaking changes.
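A minimal sketch of that idea, assuming hypothetical data layouts and function names. The capability Service A sees stays the same while Service B swaps the loader behind it. The mapping from the new layout back to the published shape is code Service B's team writes; nothing about it is automatic.

```python
from typing import Callable, Dict, List

Purchase = Dict[str, object]

# Before the restructure: one flat list of purchases per customer.
LEGACY_STORE: Dict[str, List[Purchase]] = {
    "c-42": [
        {"item": "keyboard", "amount": 79.0},
        {"item": "pro plan", "amount": 12.0},
    ]
}

# After the restructure: subscriptions and one-time transactions are split.
SPLIT_STORE: Dict[str, Dict[str, List[Dict[str, object]]]] = {
    "c-42": {
        "transactions": [{"sku": "keyboard", "total": 79.0}],
        "subscriptions": [{"plan": "pro plan", "monthly_price": 12.0}],
    }
}


def load_from_legacy(customer_id: str) -> List[Purchase]:
    return list(LEGACY_STORE.get(customer_id, []))


def load_from_split(customer_id: str) -> List[Purchase]:
    """Map the new layout back onto the shape the capability has always returned."""
    record = SPLIT_STORE.get(customer_id, {})
    one_time = [{"item": t["sku"], "amount": t["total"]} for t in record.get("transactions", [])]
    recurring = [
        {"item": s["plan"], "amount": s["monthly_price"]}
        for s in record.get("subscriptions", [])
    ]
    return one_time + recurring


def make_purchase_history_tool(
    loader: Callable[[str], List[Purchase]],
) -> Callable[[str], List[Purchase]]:
    """Build the handler behind the 'customer purchase history' capability."""
    def get_purchase_history(customer_id: str) -> List[Purchase]:
        return loader(customer_id)
    return get_purchase_history


if __name__ == "__main__":
    before = make_purchase_history_tool(load_from_legacy)
    after = make_purchase_history_tool(load_from_split)
    # Service A sees the same result either way.
    print(before("c-42") == after("c-42"))
```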

The AI Does the Heavy Lifting

This is the crucial insight: AI models are incredibly good at working with varying data structures and adapting to different formats. By letting AI handle the "how" of connection through a standardized protocol, MCP removes the brittleness of traditional APIs while preserving—and even enhancing—functionality.

Early Success Stories

Companies are already seeing the benefits:

- Block (formerly Square) has integrated MCP into their systems, citing it as foundational to making AI innovation "accessible, transparent, and rooted in collaboration"

- Development platforms like Zed, Replit, Codeium, and Sourcegraph are using MCP to give AI coding assistants real-time access to project context without building custom connectors for each tool

- Apollo, named alongside Block as an early adopter in Anthropic's announcement, is using MCP to connect AI to complex data systems

🚀 The Bigger Picture: What This Means for Platform Architecture

If MCP is the USB-C moment for APIs, we're standing at the edge of something bigger than just easier integrations.

The End of Integration Tax

Today, every new service integration carries an "integration tax"—the time, cost, and risk of building and maintaining custom connectors. MCP dramatically reduces this tax. In a world where services speak a common protocol and AI handles adaptation, the cost of adding new capabilities to your platform drops significantly.

Microservices Without the Maintenance Nightmare

Microservices promised flexibility and independent deployability. But in practice, service-to-service communication through REST APIs created tight coupling and versioning hell. MCP offers a path to truly independent services: each service exposes its capabilities through MCP, and AI-powered clients consume those capabilities without brittle contracts.

The Questions Worth Asking

As you think about your platform's future, consider:

- What if your services could evolve independently without coordination overhead?

- What if adding a new data source to your AI features took hours instead of weeks?

- What if backward compatibility became the default instead of a constant struggle?

A Word of Caution

MCP is young. Security researchers have already identified concerns around prompt injection, tool permissions, and trust verification. Like any protocol in its early days, there will be growing pains. But the direction is clear: standardized, AI-mediated connections are likely the future of how software components talk to each other.
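One common mitigation for the tool-permission concern is to gate tool calls on the client side. The sketch below is an assumption about how such a gate could look, not part of the MCP specification. The `ToolPolicy` class and the tool names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class ToolPolicy:
    """Client-side gate: which tools the model may call, and which need a human."""
    allowed: Set[str] = field(default_factory=set)
    needs_confirmation: Set[str] = field(default_factory=set)

    def check(self, tool_name: str, user_confirmed: bool = False) -> bool:
        if tool_name not in self.allowed:
            return False  # deny by default, even if a prompt asks for the tool
        if tool_name in self.needs_confirmation and not user_confirmed:
            return False  # state-changing tools wait for explicit approval
        return True


if __name__ == "__main__":
    policy = ToolPolicy(
        allowed={"get_purchase_history", "issue_refund"},
        needs_confirmation={"issue_refund"},
    )
    print(policy.check("get_purchase_history"))
    print(policy.check("issue_refund"))
    print(policy.check("issue_refund", user_confirmed=True))
    print(policy.check("delete_customer"))
```

A gate like this limits what an injected prompt can trigger, but it does not address trust verification of the server itself.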

The Bottom Line

MCP won't replace traditional APIs overnight—nor should it. But for AI-powered applications, agent-based workflows, and platforms seeking to reduce integration complexity, MCP represents a fundamental shift: from rigid contracts to adaptive capabilities, from tight coupling to flexible orchestration.

The USB-C revolution didn't happen because one cable was slightly better—it happened because everyone agreed on a standard. MCP might just be that agreement for the AI era.

What's your biggest API pain point? Could a protocol like MCP solve it? The conversation is just beginning.