🫧 AI Bubble or Golden Age? Why Most Engineers Are Using AI Wrong (And the 7% Who Get It)

🔥 1. Introduction - We've Been Here Before

If you've been following the AI hype lately, you've probably heard two opposing narratives: "AI is going to change everything!" versus "AI is an overvalued bubble about to burst." Sound familiar? It should. We've literally seen this movie before—in the early 2000s.

Remember when every company was a ".com" company? When Terra Networks, once valued at €13 billion, became the clearest example of dot-com excess, only to collapse spectacularly? When the NASDAQ crashed 76.81%, wiping out $5 trillion in market value?

Here's the thing: the internet didn't die. The bubble did.

And that's exactly where we are with AI right now. According to Carlota Pérez's groundbreaking research in "Technological Revolutions and Financial Capital," every major technology follows the same pattern:

"After a phase of chaotic installation and financial bubble that explodes, comes a deployment period or golden age where society finally enjoys the technology already depurated and productive."

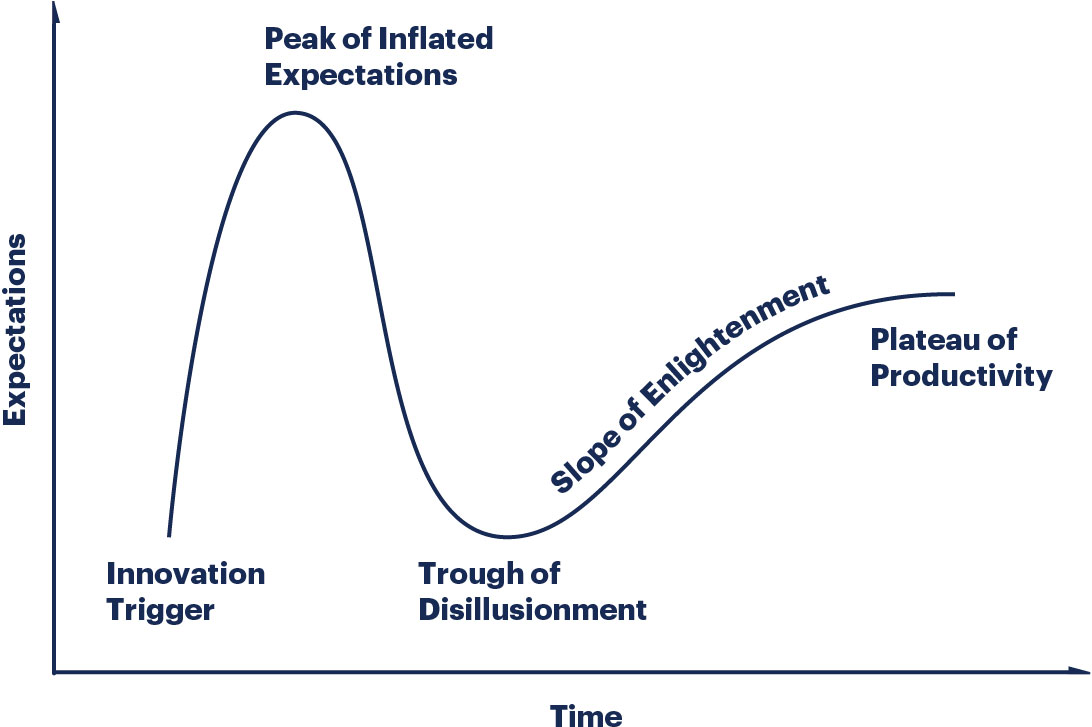

We're not in an AI winter. We're in the Frenzy phase just before the turning point—exactly where the dot-com bubble was in 1999. The Gartner Hype Cycle shows us at the "Peak of Inflated Expectations," about to descend into the "Trough of Disillusionment" before climbing to the "Plateau of Productivity."

The evidence?

Palantir: The data analytics and AI platform company, which uses AI/ML to help organizations make sense of massive datasets, is trading at 254 times its earnings, the highest valuation in the S&P 500. Michael Burry (yes, THAT Michael Burry from The Big Short; if you haven't seen the movie, add it to your list) just disclosed put options against it with a notional value of about $912 million. Even after strong earnings, the stock dropped 8% on valuation concerns.

Meta's AI Talent War: Meta is currently spending astronomical sums stealing AI experts from competitors. Are they building the future—or desperately trying to destroy the competition while pumping up their AI portfolio to justify valuations? The aggressive talent grab smells more like the late-90s "get big fast" mentality than sustainable growth.

LLM Scaling Hits a Wall: Model improvements are showing diminishing returns. When you double training costs, quality increases by only ~1%. As Databricks CTO Matei Zaharia put it: "We've put all the data on the internet into these models already." Even Sam Altman and major AI labs are quietly acknowledging that pre-training scaling isn't delivering the exponential improvements they promised.

But here's what you need to understand: This isn't the end. It's the beginning of the real AI revolution.

The dot-com crash didn't kill Amazon, Google, or eBay. It killed the companies that used the internet wrong—like Terra. The AI "correction" won't kill AI—it will kill companies and careers that are using it wrong.

And right now? Sam Altman just revealed that only 7% of paying ChatGPT users were tapping its reasoning models, arguably where AI's real potential lies. If that's the picture for the general population, imagine how many engineers are making the same mistake.

💡 2. The 7% Problem - How to Actually Use AI (And Why Most Engineers Don't)

In August 2025, OpenAI CEO Sam Altman accidentally revealed something shocking: Only 7% of paying ChatGPT users were using reasoning models before GPT-5's release. Among free users? Just 1%.

Think about that. People are paying $20 a month (or more) for a Ferrari and only using first and second gear.

As the Fast Company article put it: "Not using reasoning models is like buying a car, using only first and second gear, and wondering why it's not easy to drive."

But it gets worse. Even after GPT-5's release, where the difference between "fast" and "thinking" modes is crystal clear, only about a quarter (24%) of paying users choose thoroughness over speed.

Now, these statistics are for general ChatGPT users, not engineers specifically. But here's the thing: if only 7% of paying users were reaching for AI's reasoning capabilities, there's no reason to believe engineers are doing much better. In fact, given how many engineers I see copy-pasting AI code without understanding it, the percentage might be even lower in our field.

Why This Matters for Engineers

Altman also revealed something that should make us think:

"People rely on ChatGPT too much. Young people say things like, 'I can't make any decision in my life without telling ChatGPT everything.' That feels really bad to me."

The problem isn't that people use AI. It's HOW they use it.

From what I've observed, most engineers treat AI like Stack Overflow: quick question, copy-paste answer, move on. But here's what the 7% who use it correctly understand:

✅ The "YOU Pilot, AI Assists" Principle:

Think of AI like pair programming:

- AI = Junior Developer: Fast, generates lots of code, knows syntax

- YOU = Senior Engineer: Validates, understands context, makes final decisions

WRONG WAY (What most people do):

- Quick prompt: "Fix this bug"

- Copy-paste without understanding

- Expect instant, perfect code

- Trust AI blindly

RIGHT WAY (What the 7% do):

- Code Review: "Analyze this PR for potential issues, consider edge cases, evaluate security implications, then suggest improvements"

- Debugging: "Trace the root cause of this error, evaluate multiple solutions with trade-offs, then recommend the optimal approach"

- Architecture: "Compare these 3 design patterns for this use case, analyze scalability and maintainability, then propose a solution with rationale"

- Automation: "Simulate these scenarios, analyze results for patterns, identify optimization opportunities, then provide recommendations with confidence levels"
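To make the RIGHT WAY pattern concrete, here is a minimal Python sketch that turns a task into a multi-step prompt like the examples above. The `build_prompt` helper is hypothetical and only assembles text; it doesn't call any AI API, so you can paste its output into whatever assistant you use.

```python
def build_prompt(task: str, context: str, steps: list[str]) -> str:
    """Compose a multi-step prompt that asks the model to reason before answering."""
    # Number the steps so the model works through them in order
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Task: {task}\n"
        f"Context:\n{context}\n\n"
        "Work through these steps in order and show your reasoning for each:\n"
        f"{numbered}\n\n"
        "Finish with a recommendation and state your confidence level."
    )


if __name__ == "__main__":
    prompt = build_prompt(
        task="Code review",
        context="<paste the PR diff here>",
        steps=[
            "Analyze the PR for potential issues",
            "Consider edge cases",
            "Evaluate security implications",
            "Suggest improvements",
        ],
    )
    print(prompt)
```

The point of the structure is to force an explicit sequence (analyze, weigh trade-offs, then recommend) instead of a one-line "Fix this bug."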

The key difference? Give AI time to THINK. Use reasoning modes. Don't treat it like a search engine; treat it like a junior colleague you're mentoring or a senior one you're using as a sounding board.

Engineering Translation: Real-World Applications

- Code Review Assistant: AI spots patterns and suggests improvements → YOU validate architecture and business logic

- Test Generation: AI creates test cases → YOU ensure coverage of critical paths

- Documentation: AI drafts technical docs → YOU refine for accuracy and context

- Debugging Partner: AI suggests potential causes → YOU understand root cause and systemic implications

- Boilerplate Automation: AI generates repetitive code → YOU architect the system design
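Here is what "YOU validate" can look like in practice. This is a hypothetical example, not output from any particular tool: `ai_draft_average` stands in for code an assistant might draft, and `average` is the reviewed version with the edge case a senior engineer would test for before merging.

```python
def ai_draft_average(values):
    """Draft as an assistant might produce it: fine on the happy path."""
    return sum(values) / len(values)  # raises ZeroDivisionError on []


def average(values):
    """Reviewed version: empty input is an explicit, documented error."""
    if len(values) == 0:
        raise ValueError("average() requires at least one value")
    return sum(values) / len(values)


def test_average():
    # Happy path: both versions agree
    assert ai_draft_average([2, 4, 6]) == 4
    assert average([2, 4, 6]) == 4
    # Edge case the reviewer adds: empty input must fail clearly
    try:
        average([])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for empty input")


if __name__ == "__main__":
    test_average()
    print("all checks passed")
```

The draft isn't wrong on typical input, which is exactly why copy-pasting it feels safe; the review step is what turns an implicit crash into a deliberate contract.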

Remember: AI doesn't understand causality, business context, or domain expertise. It's a pattern-matching tool. An incredibly powerful one—but still just a tool.

You remain the Architect of the Matrix. AI is your assistant (Agent Smith, anyone?).

⚙️ 3. Will AI Take Your Engineering Job? (Spoiler: It's Complicated)

Let's address the elephant in the room with actual data, not fear-mongering.

The Alarming Headlines Are Real:

- Microsoft: Up to 30% of code is now AI-written. 40% of recent layoffs? Software engineers.

- 2025 (first 6 months): 77,999 tech jobs lost directly to AI

- Entry-level hiring: Down 25% at Big Tech companies

- IT unemployment: Jumped from 3.9% to 5.7% in one month (January 2025)

- Goldman Sachs prediction: AI could expose the equivalent of 300 million full-time jobs globally to automation

Scary, right? But here's the other side:

The Job Creation Data:

- World Economic Forum: 170 million NEW jobs by 2030 vs. 92 million displaced = net +78 million jobs

- AI/ML Engineer roles: 41.8% yearly growth

- Data Scientist positions: 10% year-over-year growth

- 350,000 new AI-related roles emerging (didn't exist 5 years ago)

- Indian IT firms: Trained 775,000 employees in AI (upskilling, not firing)

The Truth: AI Isn't Smart—It's Math

I remember studying neural networks at university. You know what they are? Stacks of mathematical matrices. You feed in an input, it passes through layers of weights and biases, and out comes an output. After training on massive datasets, the network learns patterns.
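To show how unmagical that is, here is a minimal pure-Python sketch of a forward pass through a tiny two-layer network. The weights are made-up numbers for illustration; in a real model they would be learned during training, and real frameworks use optimized matrix libraries rather than Python lists.

```python
import math


def matvec(weights, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def layer(weights, biases, inputs, activation):
    """One dense layer: activation(W @ x + b), applied element-wise."""
    return [activation(z + b) for z, b in zip(matvec(weights, inputs), biases)]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical weights; a trained model would have learned these values
W1 = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]  # 3 hidden units, 2 inputs
b1 = [0.0, 0.1, 0.2]
W2 = [[1.0, -1.0, 0.5]]                      # 1 output unit, 3 hidden units
b2 = [0.0]


def forward(x):
    """Input -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = layer(W1, b1, x, relu)
    return layer(W2, b2, hidden, sigmoid)[0]


print(forward([1.0, 2.0]))
```

Every step is multiplication, addition, and a simple nonlinearity. Training just adjusts the numbers in `W1`, `b1`, `W2`, and `b2`.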

But here's what it DOESN'T do:

- Understand context

- Grasp causality

- Have common sense

- Validate its own output (sometimes it does 🤔)

- Make strategic decisions

AI is an advanced word-completion tool. A really, REALLY good one that's been trained on trillions of tokens. It predicts what comes next based on patterns, but it doesn't understand WHY.

Think of it as autocomplete on steroids. It looks clever because it's seen so many examples—but it's not actually thinking.
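The "predict what comes next" idea can be illustrated with a toy bigram model: count which word followed which in some training text, then always pick the most frequent follower. This is a deliberate simplification; real LLMs use neural networks over tokens and far richer context, but the core move of predicting the next item from observed patterns is the same. All names here are illustrative.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words followed it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # Ties go to the follower seen first in the training text
    return counts.most_common(1)[0][0]


def complete(follows, start, length=4):
    """Greedily extend `start` one predicted word at a time."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


corpus = "the model predicts the next word . the model has no idea why the next word fits ."
model = train_bigrams(corpus)
print(complete(model, "the"))  # prints: the model predicts the model
```

Notice that it loops back on itself: it continues text fluently from patterns it has seen, with no notion of whether the result makes sense.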

So What's Really Happening?

AI is replacing:

- Copy-paste coders who don't understand their code

- Engineers who can't validate AI output

- Routine, repetitive coding tasks

- Entry-level positions doing manual work

AI is NOT replacing:

- Architects who design systems

- Senior engineers who understand trade-offs

- Domain experts who know business context

- Problem solvers who orchestrate solutions

- Engineering managers who coach and mentor the engineers listed above

The new reality: One engineer using AI can do the work of three engineers. But that means you need to be that ONE engineer, not one of the other two.

The Data Shows the Split:

- 54% of IT professionals (I'm pretty sure it's closer to 90%) already use AI daily → thriving

- Engineers who resist adaptation → facing displacement

The jobs aren't going away. They're evolving:

- From writing code → to orchestrating solutions

- From manual tasks → to strategic thinking

- From individual contributor → to AI-amplified engineer

🚀 4. Your Survival Guide - The Golden Age is Coming

Here's what the dot-com crash taught us: The companies that survived weren't the ones with the best technology. They were the ones that used technology to solve real problems.

Amazon survived because it actually delivered value—not because it had the flashiest website. Google survived because it made search work—not because it had the coolest algorithm.

The same will be true for AI.

Three Truths About the AI Revolution:

1. AI is a Tool, Not Magic

- It's mathematical pattern matching, not sentient intelligence

- It assists your thinking; it doesn't replace it

- You validate, AI generates

2. The Job Isn't Dying—It's Evolving

- Junior tasks: Automated (good riddance to boilerplate)

- Senior responsibilities: Amplified (strategy, architecture, validation)

- Your role: Shift from coder to orchestrator

3. Adapt or Get Left Behind

- Not "AI will replace you"

- But "An engineer using AI will replace an engineer who doesn't"

- The copilot principle is your survival strategy

Your Action Plan:

This Week:

- Start using reasoning modes in ChatGPT (be in the 7%, not the 93%)

- Stop copy-pasting. Start validating.

- Give AI prompts that require thinking, not just quick answers

This Month:

- Learn one AI tool deeply (GitHub Copilot, Cursor, Claude)

- Practice the copilot approach on real work

- Understand when NOT to use AI (architectural decisions, business context, critical validation)

This Year:

- Master AI-assisted workflows

- Focus on skills AI can't replace: architecture, domain expertise, strategic thinking, good practices

- Position yourself as the engineer who amplifies AI rather than competing with it

The Golden Age is Coming

Just like the internet went from bubble to essential infrastructure, AI will go from hype to productivity engine. The question isn't IF—it's WHEN.

And when that golden age arrives, there will be two types of engineers:

- Those who learned to use AI as a copilot (the minority who get it) → 3x productivity, senior roles, job security

- Those who use AI like 93% of users do (quick copy-paste, no thinking) → competing with AI instead of collaborating with it

Carlota Pérez's research shows us the pattern. The Gartner Hype Cycle shows us the timeline. The data shows us the split.

The bubble will burst. The technology will stay. The question is: which side will you be on?

What's your take? Are you using AI like the 7% (thinking mode, validation, copilot)? And more importantly—do you think engineers are doing any better than the general population? Drop a comment below and let's ignite the mother of all engineering debates. 👇

📚 Sources & Further Reading:

- Gartner Hype Cycle Methodology

- Carlota Pérez: Technological Revolutions and Financial Capital

- Fast Company: Most People Use ChatGPT Wrong

- TechCrunch: AI Scaling Laws Showing Diminishing Returns

- Indeed: AI at Work Report 2025